Back around the end of 2009, EMC released the first promising step towards automated tiering Nirvana within their storage platforms. And while it was a step in the right direction, the main drawback, at least in the CLARiiON and Celerra arrays, was that data had to be tiered at the LUN level…meaning that the whole LUN had to be moved between tiers. If you were planning to add Enterprise Flash Drives to take advantage of denser I/O, that made it difficult to do so at a realistic cost per I/O.

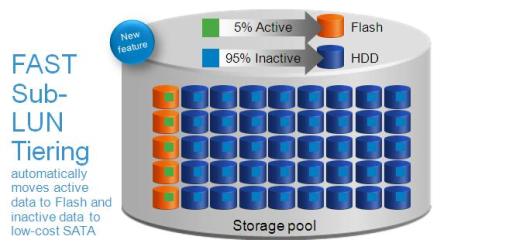

With the advent of FLARE 30, EMC has addressed the challenges around automated storage tiering within their mid-range storage platforms by releasing FASTv2. FASTv2 allows for tiering of storage at the sub-LUN level, in 1GB chunks, meaning that an individual LUN can be spread across multiple types of storage, i.e. SATA, FC, and EFD. This ensures that only the most active blocks of a LUN reside on Enterprise Flash Drives, which makes for much more efficient use of all storage types. The bottom line is that, as a customer, you will get a lot more mileage out of an incremental investment in Flash storage.
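To make the sub-LUN idea a bit more concrete, here is a minimal Python sketch of placing a LUN's 1GB chunks onto tiers by activity. It is only an illustration of the concept, not EMC's actual relocation algorithm: the function name `place_slices`, the per-chunk I/O counts, and the tier capacities are all made up for the example.

```python
from typing import Dict, List

SLICE_GB = 1  # tiering granularity described above: 1GB chunks


def place_slices(io_counts: List[int], capacity_gb: Dict[str, int]) -> Dict[int, str]:
    """Assign each 1GB chunk of a LUN to a tier, hottest chunks first.

    io_counts[i] is a hypothetical I/O count for chunk i. capacity_gb maps a
    tier name to its free gigabytes and must list the fastest tier first.
    """
    # Rank chunk indexes from most active to least active.
    ranked = sorted(range(len(io_counts)), key=lambda i: io_counts[i], reverse=True)
    remaining = dict(capacity_gb)
    tiers = list(capacity_gb)  # dicts keep insertion order: fastest tier first
    placement: Dict[int, str] = {}
    for idx in ranked:
        for tier in tiers:
            if remaining[tier] >= SLICE_GB:
                remaining[tier] -= SLICE_GB
                placement[idx] = tier
                break
        else:
            raise ValueError(f"not enough capacity for chunk {idx}")
    return placement


if __name__ == "__main__":
    # An 8GB LUN where only two chunks are really busy (invented numbers).
    io_counts = [5, 900, 12, 40, 850, 3, 60, 1]
    tiers = {"EFD": 2, "FC": 3, "SATA": 10}
    placement = place_slices(io_counts, tiers)
    for idx in sorted(placement):
        print(f"chunk {idx}: {io_counts[idx]:>4} I/Os -> {placement[idx]}")
```

In this toy LUN only chunks 1 and 4 land on EFD, so 2GB of Flash serves the bulk of the I/O while the other 6GB sits on FC and SATA. With LUN-level tiering, the whole 8GB would have had to move to Flash to get the same effect.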

So now that we know the capabilities of FAST at a high level…what about that other new feature called FAST Cache? Well, both features make efficient use of Flash drives, but that’s where the similarities end. FAST Cache simply allows for Enterprise Flash Drives to be added to the array and used to extend the cache capacity for both reads and writes, which translates into better system-wide performance.

If you're trying to figure out what makes the most sense for your environment…the bottom line is that if you are looking for an immediate, array-wide increase in performance, then FAST Cache is the best place to start. FAST Cache allows a small investment in Flash drives, as few as 2 drives for CLARiiON and Celerra arrays, to deliver a big jump in performance. And while it is beneficial for almost all workloads, the key thing to remember is that it is based on locality of reference. This means that FAST Cache will bring the most benefit to workloads which tend to hit the same areas of disk multiple times, i.e. workloads with a high locality of reference. Some examples of applications which tend to have a high locality of reference are OLTP-based, file-based, and VMware View, to name a few. You can certainly work with your VAR, hopefully Varrow :-), to gather performance data against your array, specifically the Cache Miss Rate metric, and use it to show where the addition of FAST Cache will provide the most benefit.
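If locality of reference sounds abstract, the following Python sketch shows why it matters for a cache. It replays two made-up block access traces through a simple LRU cache and reports the miss rate for each. The LRU policy, the `miss_rate` function, and the trace sizes are assumptions chosen for illustration; this is not how FAST Cache decides what to promote.

```python
from collections import OrderedDict
from typing import Iterable


def miss_rate(trace: Iterable[int], cache_blocks: int) -> float:
    """Replay a trace of block addresses through an LRU cache of the given size
    and return the fraction of accesses that missed the cache."""
    cache: "OrderedDict[int, bool]" = OrderedDict()
    misses = 0
    total = 0
    for block in trace:
        total += 1
        if block in cache:
            cache.move_to_end(block)       # hit: mark as most recently used
        else:
            misses += 1
            cache[block] = True
            if len(cache) > cache_blocks:
                cache.popitem(last=False)  # evict the least recently used block
    return misses / total if total else 0.0


if __name__ == "__main__":
    # High locality: the workload keeps coming back to the same 50 blocks.
    hot = [i % 50 for i in range(10_000)]
    # Low locality: a one-pass scan that never touches a block twice.
    scan = list(range(10_000))
    print(f"high locality miss rate: {miss_rate(hot, 100):.3f}")
    print(f"low locality miss rate:  {miss_rate(scan, 100):.3f}")
```

The first trace misses only while the cache warms up, while the scan misses on every access no matter how much cache you add. That is the pattern to look for in the Cache Miss Rate data from your own array: a high miss rate on a workload that revisits the same data suggests the existing cache is too small for the working set, which is where extra cache capacity is most likely to pay off.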

FAST tiering can still be utilized in an array that has FAST Cache enabled, but its focus will be on increasing the performance of specific data sets, particularly those with sustained access, while lowering the TCO of the storage required to house that data. In comparison, FAST Cache will allow for an immediate boost in performance for burst-prone workloads. So the answer is really that the 2 technologies work together to increase the performance of storage in your environment while lowering the overall Total Cost of Ownership.
